The Great AI Image Model Reset: DALL·E, Stable Diffusion, Imagen — and What It Means for Creators Using Tools Like Artistly

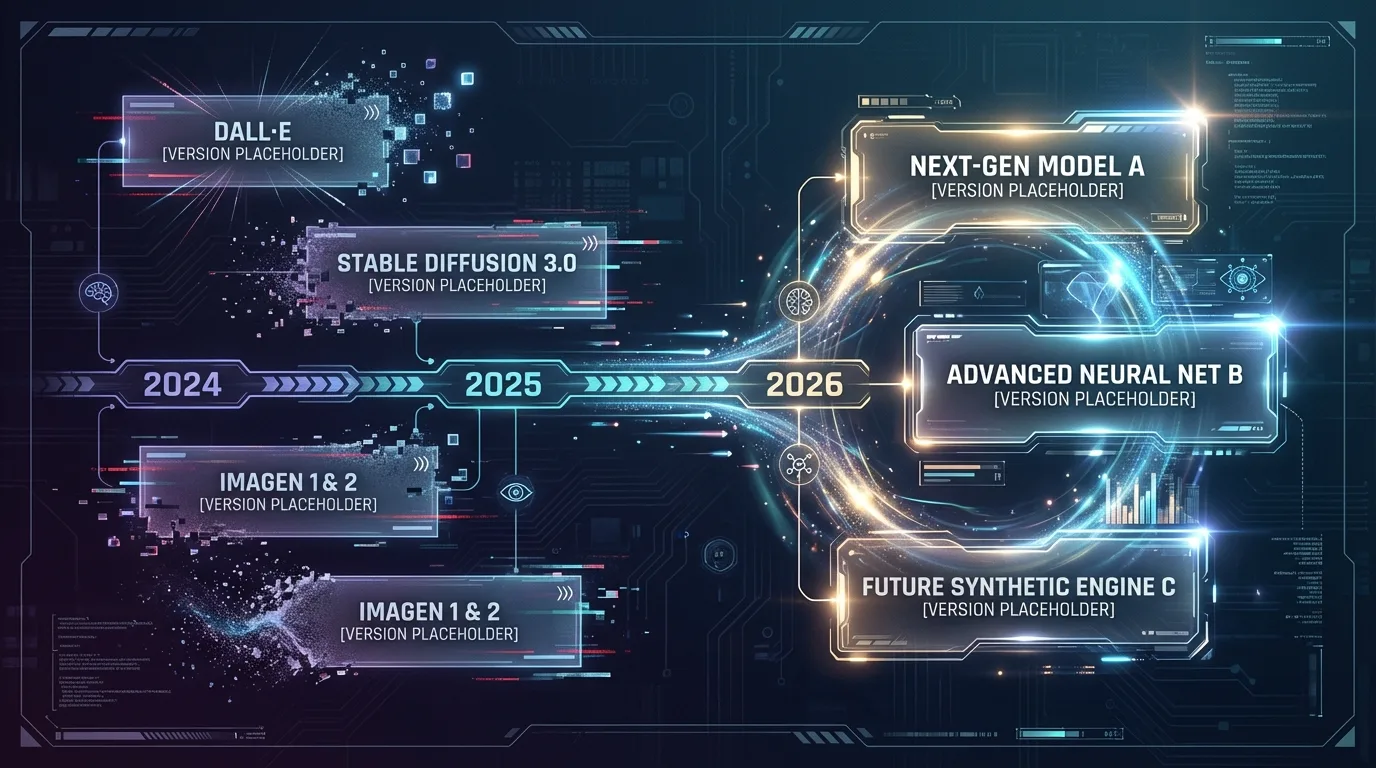

Over the past year, major AI companies have quietly deprecated or replaced flagship image models — including DALL·E 2 & 3, Stable Diffusion 3.0 APIs, and Google’s Imagen 1 & 2. While framed as upgrades, these changes have disrupted creative workflows, altered visual styles, and sparked user frustration — especially for creators relying on downstream platforms like Artistly.

The result? A subtle but powerful shift in who controls digital aesthetics.

TL;DR

- OpenAI is deprecating DALL·E 2 and 3 API snapshots in favor of GPT-Image models.

- Stability AI deprecated Stable Diffusion 3.0 APIs to push 3.5.

- Google removed Imagen 1 & 2 and has scheduled certain fine-tuning features for removal.

- Creators report style drift, quality regressions, and broken workflows.

- Artistly users raise concerns about comment moderation, feature clarity, refunds, and quota enforcement.

- The bigger issue isn’t one company — it’s systemic model churn reshaping creative control.

There’s a pattern here.

It’s not loud. It doesn’t come with a press conference.

But it’s changing how creative work gets made.

Let’s unpack it.

Why Are Major Image Models Being Deprecated So Quickly?

In the last 12 months, three of the biggest players in generative AI have removed or scheduled removal of cornerstone image models:

OpenAI: From DALL·E to GPT-Image

OpenAI’s official deprecation notice confirms that DALL·E 2 and 3 model snapshots will be removed from the API on May 12, 2026. Developers were notified November 14, 2025.

In practice, though, many users feel the transition has already happened.

Inside ChatGPT, image generation has moved toward GPT-Image-1 and GPT-Image-1.5. Community threads describe the change as abrupt. Some artists argue the new model produces flatter materials, repeating noise patterns, and weaker local contrast compared to DALL·E 3.

One recurring theme:

“I can’t reproduce the style I built my portfolio around.”

That’s not a small complaint.

For a hobbyist, it’s frustrating.

For a commercial illustrator? It’s revenue.

OpenAI presents this as model evolution. Creators experience it as personality replacement.

Stability AI: SD3.0 → 3.5 in Record Time

Stability AI deprecated Stable Diffusion 3.0 APIs as of April 17, 2025, pushing users toward 3.5-based endpoints.

SD3 Medium faced heavy criticism for artifacts and prompt adherence issues. Version 3.5 was positioned as a correction.

But here’s the tension:

If you built a workflow on SD3.0, trained prompts around its quirks, maybe even sold client work based on that aesthetic — you don’t just “upgrade.” You recalibrate.

Model swaps aren’t like software patches.

They’re creative personality swaps.

Google: Imagen Consolidation and Feature Removal

Google deprecated Imagen 1 and 2 in June 2025 and removed them entirely in September 2025. Preview Imagen 4 models were also removed in favor of GA variants.

Even more striking:

Imagen subject and style fine-tuning features are scheduled for removal.

For creators who relied on style personalization, that’s not cosmetic. It’s foundational.

When entire capability classes disappear, workflows fracture.

What Do Users Actually Complain About?

The pattern across OpenAI, Stability, Google, Leonardo, and NovelAI communities is remarkably consistent:

- Style drift

- Loss of prompt consistency

- Output regressions

- Performance slowdowns

- Lack of changelog clarity

One Leonardo user described returning after two years and finding their “Legacy Mode” gone — including negative prompt helpers and older models like Absolute Reality.

A NovelAI user reported sudden inconsistency in V4, with artist tags overpowering each other.

The emotional undertone?

“I didn’t consent to this aesthetic shift.”

Where Does Artistly Fit Into This?

Artistly isn’t a foundational model provider like OpenAI or Google. It’s a consumer-facing creative platform layered on top of evolving model infrastructure.

And that layering is where friction multiplies.

1. Comment Moderation Allegations

Several Facebook users claim their comments pointing out AI artifacts in Artistly ads were deleted. One user in a Simpsons meme group said their critique was removed and they were blocked.

Another thread suggests comments identifying images as AI-generated were removed from promotional posts.

These are anecdotal. There’s no public moderation policy explaining the decisions.

But perception matters.

When critique disappears, trust erodes — even if the moderation was routine.

2. Style Control vs. Opaque Behavior

Artistly markets features like:

- AI Stylizer

- Image Designer V5

- Style Replicator

- Pixar-style presets

And many users love them, especially marketers who need quick visuals.

But others report confusion:

A Facebook user trying the “train your own AI” feature described inconsistent behavior. Adjusting a 3D character’s height either replicated the original image or produced unexpected photorealistic outputs.

Their conclusion?

“It seems like everything except a system designed to train the AI to get what I want.”

That sentence captures the frustration perfectly.

Promise of control.

Experience of opacity.

3. Refund Friction and Upsell Concerns

This is where the complaints are strongest.

Across Reddit, SiteJabber, Trustpilot, and Capterra, patterns emerge:

- “Unlimited commercial access” plans allegedly locking features behind upgrades

- Refund requests requiring follow-up or public complaints before processing

- Trial cancellation confusion

- Feature mismatch vs demo expectations

Yet, in contrast:

Many users report smooth refunds within 30 days and responsive support.

So what’s the reality?

Inconsistency.

Some buyers feel supported. Others feel misled.

When your marketing promises “better than Midjourney + Canva” and “lifetime access,” expectations skyrocket.

And AI tools rarely meet sky-high expectations across all use cases.

4. Quota Enforcement and Account Bans

One user claims they were banned for “overuse” despite staying within a ~400-image daily limit.

This is a fascinating tension.

“Unlimited.”

But with invisible thresholds.

If heavy usage triggers review or enforcement, the thresholds need to be communicated transparently, especially under lifetime-deal pricing.

The Bigger Pattern: Invisible Governance

Here’s the uncomfortable truth.

Model deprecations are governance decisions.

They determine:

- What aesthetic is easy

- What style is reproducible

- What workflows remain viable

- What creative identities survive

Upstream companies optimize for compute efficiency, safety alignment, or business positioning.

Downstream platforms optimize for marketing and conversions.

Creators sit in the middle.

And when something changes?

They often find out the hard way.

A Micro Case Study: The Creative Shock Cycle

Imagine this scenario:

A digital children’s book creator uses Artistly’s coloring book generator. Marketing shows crisp line art.

They purchase the commercial plan.

The output? Distorted lines and inconsistent formatting.

They request a refund.

First reply asks for clarification.

Second email receives no response.

A public review is posted.

Refund processed days later.

Is that malicious intent?

Not necessarily.

But friction compounds distrust — especially when layered over shifting model behavior upstream.

Why This Matters More Than It Looks

We’re entering an era where:

- Models update silently

- Aesthetic baselines shift

- Feature sets shrink or expand without user input

- SaaS layers abstract the true source of change

If you’re a casual user, you adapt.

If you’re a professional creator?

Your brand voice may literally be tied to a deprecated model.

And you don’t control that lifecycle.

So What Should Creators Do?

Here’s the pragmatic approach:

- Version-Document Your Workflow: Record prompts, settings, and model versions when possible.

- Avoid Over-Branding Around One Model’s Aesthetic: If your entire identity depends on one engine’s quirks, that’s a risk.

- Test Before Scaling: Especially before client commitments.

- Read Refund Policies Closely: “Lifetime” and “Unlimited” often contain operational caveats.

- Diversify Tools: Relying on one ecosystem increases vulnerability to churn.
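
For the first recommendation, version-documenting a workflow, here is a minimal Python sketch of what such a log could look like. It is only an illustration, not a feature of any platform mentioned here: the `GenerationRecord` class, the `append_record` and `records_for_model` helpers, and the JSON Lines file layout are all hypothetical names and choices, and it assumes you enter the model name and settings by hand, since many tools do not expose them.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class GenerationRecord:
    """One image generation, captured so it can be compared or retried later.

    Hypothetical structure: the field names are illustrative, not taken
    from any provider's API.
    """
    prompt: str
    model: str  # whatever model/version label the tool shows you
    settings: dict = field(default_factory=dict)  # e.g. seed, size, style preset
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def fingerprint(self) -> str:
        """Short stable hash of prompt + model + settings (timestamp excluded)."""
        payload = json.dumps(
            {"prompt": self.prompt, "model": self.model, "settings": self.settings},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


def append_record(log_path: str, record: GenerationRecord) -> dict:
    """Append one record as a JSON line and return the written entry."""
    entry = asdict(record)
    entry["fingerprint"] = record.fingerprint()
    with open(log_path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(entry, ensure_ascii=False) + "\n")
    return entry


def records_for_model(log_path: str, model: str) -> list:
    """Return every logged entry made with the given model label."""
    matches = []
    with open(log_path, "r", encoding="utf-8") as fh:
        for line in fh:
            line = line.strip()
            if not line:
                continue
            entry = json.loads(line)
            if entry.get("model") == model:
                matches.append(entry)
    return matches
```

Calling `append_record("generations.jsonl", GenerationRecord(prompt="...", model="...", settings={"seed": 42}))` after each keeper image builds a plain-text history. If a model is later retired, `records_for_model` pulls out every prompt that depended on it, which gives you a concrete list to re-test on the replacement.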

Final Thought: This Isn’t Just About Artistly

OpenAI, Stability, Google — they all deprecated image systems within a year.

Leonardo removed legacy modes.

Integrators dropped “obsolete” endpoints.

This isn’t one bad actor.

It’s structural volatility in a young industry.

And here’s the part nobody talks about:

The real power in generative AI isn’t just who builds the model.

It’s who decides when your model disappears.

That’s the quiet reset happening right now.

And creators would be wise to pay attention.